For a long time I have been in and out of Microsoft's smartphone ecosystem, having bought my first Windows-powered device back in 2005 when I was 13. Back then they were called Windows Mobile phones.

I got my first Windows Phone, an HTC HD7, in 2011, and it feels like a lifetime ago. It was then that Microsoft announced that Windows Mobile was to be replaced by a new, sleeker and more modern operating system known as Windows Phone 7. At first it was a great operating system, mainly because it was different to the competition, but in no time at all I started to see the error of my ways in choosing a device powered by Microsoft's operating system. Months into owning my Windows Phone 7 device there was still no Facebook, and half of the other most useful apps had no intention of coming. The big update known as Windows Phone Mango was supposedly bringing sweeping changes that would improve the device, but it was a long wait for something that you weren't even sure would fix the issues.

Microsoft entered a market controlled by two large companies, Apple and Google, both of which had already been their rivals in other markets. Microsoft's corporate business model was their only strength here: the other two were focused on overall dominance of the smartphone market, whereas Microsoft, with the Office brand amongst other things, could have focused on making their devices better suited to the enterprise market.

Unfortunately for Microsoft, they actually went down the route of trying to sell their phones to the average user. This created a variety of problems, because instead of focusing on the enterprise market they had to cater to everyone, much as Apple and Google did with iOS and Android. This made them just another smartphone operating system maker, and they lost their own identity trying to copy ideas from their competitors.

My HD7 was the only smartphone contract I have ever paid to get out of early, simply because the operating system was so bleeding awful. The phone itself, however, was actually really good.

Windows Phone 10 came out and its release was a surprise to me, as I had thought by that point Microsoft might have realised that there was no point in continuing with something so dreadful. Microsoft even went as far as to buy the Lumia line from Nokia and tried to market the phones as Microsoft phones.

Nothing worked for them. Windows 10 Redstone 2 was released as a big update and a promised Surface Phone was rumoured. People actually thought that it had a chance of becoming something, but no, nothing came of it. This article was inspired by another, which also talks about how sad the fate of Windows Phone is.

Shortly after I built my latest PC, the Red Revolution, AMD released their Radeon VII cards. These were designed to compete with Nvidia, whose line-up just gets stronger and stronger. The main target of these cards was Nvidia's RTX 2080, which has allowed Nvidia to hold the top-dog position in the GPU market, with AMD more focused on the budget builder, or those just looking to save a bit.

AMD haven't done that as of yet, and the RX5xx series is a bit dated for someone who, like me, is building a new PC. The card in my gaming PC is an AMD Radeon HD 7950 and it's well and truly dated. But I've got brand loyalty: I've always gone for ATI/AMD cards for as long as I can remember, because there was once a time when ATI cards were superior in many ways to Nvidia cards, with the latter having trouble with overheating and the former having trouble with software.

It's time AMD launched their next generation of graphics cards. Mainstream cards are my focus. My current card was a high-end one and I probably could have just stuck to mainstream, as it replaced a Radeon HD 5670, which was extremely mainstream.

I'm holding out for AMD's next generation of cards in the hope I can get a mid-range card for a lot less than what I paid for my 7950, but I'm not impressed.

Does anyone know what's going on with the next generation of cards?!

My 19th birthday present, a Fujitsu T4410 tablet PC, specifically bought for taking to university with me, has lain in the same place for months now, awaiting a new SSD after the scandal of OCZ drives failing (no wonder they disappeared).

But there were two things I cheaped out on when buying the tablet PC (it was already £1000): the CPU and 3G. I had hoped to upgrade my tablet to include a 3G card and bought over £250 worth of cards in the hope that one of them would work, including a Sierra Gobi 2000 that actually came from a Fujitsu Lifebook. Alas, none of them worked, and I figured out that it was more than likely because the BIOS was locked to prevent one being added afterwards. I knew that there was really only one way to fix this: buy a new computer. I wasn't going to do that in a hurry.

However, at the start of the year I was looking for a new battery for my tablet, since the one I have now only holds about half of its full charge, and whilst I was at it I saw a motherboard which conveniently featured a better CPU and 3G/UMTS card support. It was also only £26. I decided to give it a go.

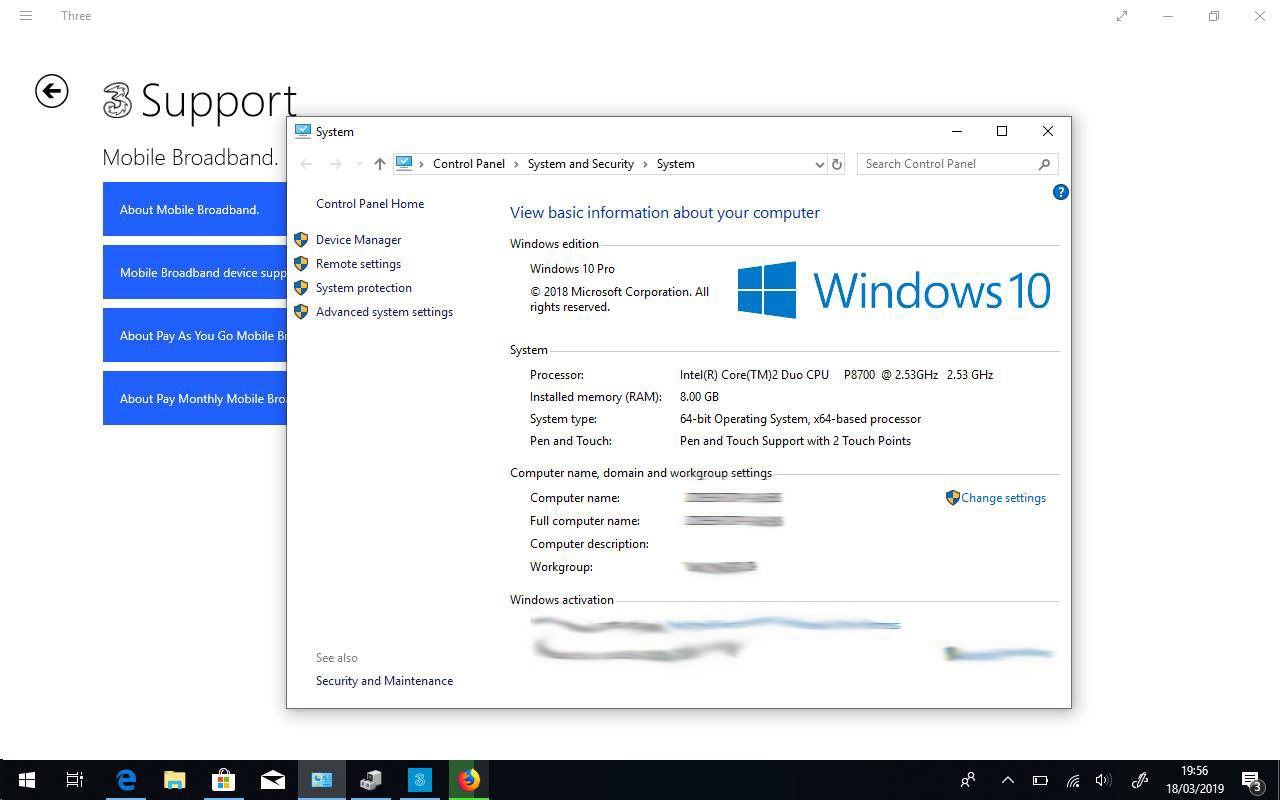

Finally, after about 5 hours of ripping out the insides of my 2010 Lifebook, I have managed to breathe new life into it. My once T6570 powered tablet PC now features a P8700 (lower TDP, better performance, longer battery life) and a new 3G card! Although this was more of an experiment, it was also to help bring back power to a laptop I love owning, even if just for fun or my own reasons. It has been a fun experiment that worked really well.

This post is written for a particular family member who wanted to know what this means.

By the year 2025, BT aims to abolish the good old POTS or Plain Old Telephone Service, also known as the Public Switched Telephone Network or PSTN.

Wait? What?! Does that mean no more landline calls?!

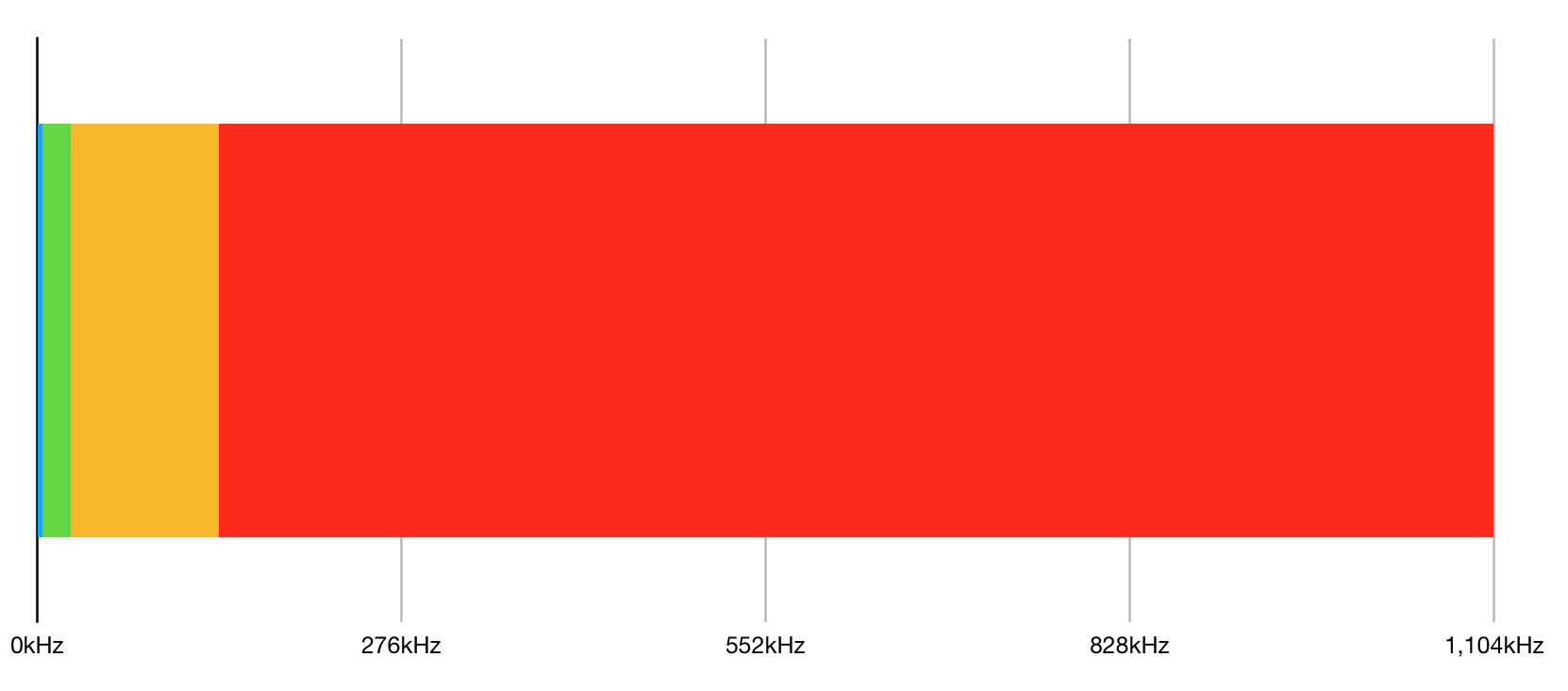

No. Let me explain how the system works at present. We have a hybrid system where our internet is carried on approximately three-quarters or more of the bandwidth of the line. We use Copper To The Cabinet* (CTTC), Fibre To The Cabinet (FTTC) or Fibre To The Premises (FTTP) to deliver an internet connection. Broadband, as it is called, refers to the fact that three-quarters or more of the line's bandwidth is given to the internet whilst the remainder is given to the phone line, or PSTN.

When broadband first arrived, the phone signal was compressed to cope with the increased internet speed and to allow the phone and internet to be used at the same time. This meant it went from taking a full 100% of the bandwidth to just about 0.5% (0kHz to 4kHz). The rest went to the internet connection, where 87.5% of the line was given to download (138kHz to 1104kHz) and about 10% to upload (25kHz to 138kHz). Finally, to ensure that the analogue PSTN does not receive interference, about 2% of the bandwidth (4kHz to 25kHz) is reserved between it and the upload. Remember, these signals are transmitted as pulses at different frequencies. The much narrower upload band also explains why upload tends to be a lot slower than download. Below is how this is all separated out:
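The split can be checked with a few lines of Python. This is only a rough sketch: the band edges are the ones quoted in this post, the band names are labels I have picked for illustration, and the results come out close to the rounded percentages given here rather than matching them exactly.

```python
# Frequency plan as described in the post, in kHz.
# The band names are illustrative labels, not official terms.
BANDS = [
    ("voice (PSTN)", 0, 4),
    ("guard band", 4, 25),
    ("upload", 25, 138),
    ("download", 138, 1104),
]


def band_shares(bands):
    """Return {name: percentage of the total spectrum} for contiguous bands."""
    total = bands[-1][2] - bands[0][1]
    return {name: 100 * (high - low) / total for name, low, high in bands}


if __name__ == "__main__":
    for name, share in band_shares(BANDS).items():
        print(f"{name:<14}{share:5.1f}%")
```

The download band is over eight times wider than the upload band, which is the imbalance between the two speeds described above.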

As you are no doubt aware, replacing the copper cabling in use at present with fibre increases the bandwidth, so faster connections become available.

Removing the PSTN from the line would also increase speeds a little, because the 25kHz currently set aside for it could be used by the internet connection instead.

So what would happen to phone lines?

The purpose of this article was to inform someone in my family of what we have recently chosen to do in our own home - abolish the PSTN from it. Yeah, that's right, as of this week we've got no PSTN telephones in the house and we now use a PBX powered by Asterisk (that I set up back in November for my own line) and a bunch of SIP phones. Businesses have done this for years, but the flexibility of these phones is what makes them great.

When BT gets rid of the POTS and PSTN, we will all use phones running on VoIP or Voice over IP technology, basically internet phones. But that doesn't mean you need to buy any new phones: BT, or whoever is in control of the phone line in your home, will need to provide a compatible option to connect your existing phones to the new VoIP network.

I'm a bit of an expert now on SIP, VoIP and the PSTN so if you've got any questions just fire away!

*Officially, Copper To The Cabinet is not a thing; it's just what I've called it here!

Just a few days ago I was saying how Microsoft is becoming one of the tech greats again - Windows 10 finally feels like a decent OS (although it never will be macOS or Linux and will always have flaws at its core), their cloud platform is really quite something, they have opened the .NET library to everyone and made it cross-platform, and they have done awesome work with the latest version of Microsoft Office. Then they pull a stunt like buying GitHub.

Microsoft has always been very committed to GitHub, since apparently they made the most contributions to repositories, which is nice to see. But it's sad to see a company whose CEO once referred to open source as a cancer, and whose main products are all proprietary, paid-for and closed source, buying a company that is committed to making open source a big thing.

Of course I use GitHub - who doesn't in the software development world? I have my own private repositories where I store the latest versions of Dash and ZPE amongst other software, but from my point of view, particularly from the point of view of integrity, I am worried about the future of GitHub. If Microsoft pushes new restrictions as they have done in the past (for instance, the shutting down of the new free, non-Microsoft developed Halo 3 remake on PC) then GitHub may not be the place for open source developers to put their faith in.

I'm not being critical of Microsoft here, by the way, I'm just pointing out that I don't think their $7.5 billion purchase was the right move for the community.

It's true. We're finally on to BT's Superfast package! Finally our internet connection at home is fast enough to download a server backup each day without sacrificing the whole connection.

After doing a speed test this morning I have noticed that we've had a huge increase in speed. From 12Mbps all the way up to as high as 48Mbps, we're getting a much better all-round experience.

Upload isn't bad either - we're getting 8Mbps which is 16 times faster than the 0.5Mbps upload we got yesterday!

I thought I'd share some Linux wisdom with you all. Today I'm talking about symbolic links.

Until recently I have been making my live site a direct duplicate of all content of the development site. This meant that I needed to have two copies of all static files. Uh oh. For instance, my photo gallery on my website is about 400MB in size, so that's 800MB used for the photo gallery between the development site and the live site.

Overall, the method described is expensive and isn't necessary. For quite a while I have been considering symlinking the two to avoid static content being duplicated. At last, it has been done. I now have a new section on the web server called user_content - a place where all user content that is identical between the live and development websites will go. This not only simplifies things by removing the need for a manual copy between the development and live sites, but it also recovers the storage space that was wasted with the old design.

For example:

ln -s /www/user_content/jamiebalfour.scot/files/gallery /www/sites/jamiebalfour.scot/public/live/gallery/content

simplifies the whole process of the gallery updates on both the development and live sites.

Overall, using symbolic links has made huge differences to my web server.
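To see the idea in isolation, here is a small Python sketch that does the same thing as `ln -s` inside a throwaway temporary directory. The folder names and the `share_directory` helper are made up for the example and are not my real server layout. It assumes a Linux or macOS machine, since creating symlinks on Windows can need extra privileges.

```python
import os
import tempfile


def share_directory(shared_dir, link_path):
    """Create link_path as a symbolic link to shared_dir (like `ln -s shared_dir link_path`)."""
    os.makedirs(os.path.dirname(link_path), exist_ok=True)
    os.symlink(shared_dir, link_path, target_is_directory=True)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        # One real copy of the content (hypothetical layout).
        shared = os.path.join(root, "user_content", "gallery")
        os.makedirs(shared)
        with open(os.path.join(shared, "photo.txt"), "w") as f:
            f.write("one copy on disk")

        # Both sites point at the same directory instead of holding duplicates.
        for site in ("live", "dev"):
            link = os.path.join(root, "sites", site, "gallery", "content")
            share_directory(shared, link)
            with open(os.path.join(link, "photo.txt")) as f:
                print(site, "sees:", f.read())
```

Both sites read the same file, so anything added to the shared folder shows up on the live and development sites at once.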

Today I attended the Amazon AWSome conference, and I have decided that over the next few weeks I will move over to using AWS in more and more of my projects.

The conference was very useful because it gave me an insight into how I would use AWS, but it also covered the basics of getting started and how I can migrate to the Amazon cloud service. I found the talks interesting and the presenters well informed on what they were speaking about, and within the first part of the day I had decided it was time to move to using it.

So what did I learn? Well, perhaps most crucially, I learned that it's not as daunting as I first imagined and that they have most of the features I currently have available from day one. I also learned that it's not going to be overly expensive to make the shift - perhaps cheaper in the long run too.

For a fairly long time (since I moved into my halls of residence in 2013) I've been obsessed with something more than computers. Phones.

I'm not talking about smartphones, I'm talking about IP phones. VoIP or voice over IP is something I've been interested in since about 2003 when I got to use Skype for the first time. I found it incredible that we could do these things and Skype just kept getting better and better. But Skype had and still has one problem - you need a PC or some other device running the software to use it.

As I mentioned, in 2013 I got my own IP phone in my halls of residence. I had longed to be able to test one like this, so it was really awesome to get to test it for the first time. I had a Cisco one, if I remember. In early 2014 I spoke with my father about getting an IP phone for the house and moving away from the standard PSTN (Public Switched Telephone Network). With both of us being in some part of the computing industry (we both work with networking regularly), it seemed only right that we now trial this technology at home. In both of my previous offices I got the wonderful experience of using IP phones, in particular at my last job, where we used Grandstream phones which had all the bells and whistles. I looked up the phone we had at work and, to my surprise, it was only £70 odd to buy one on Amazon.

Last Friday we officially began moving to IP phones across the house (and it's all been down to me to do it, but hey, I guess I've managed, even though it's been a completely new concept). SIP, or Session Initiation Protocol, is the backbone of our new network. Our network is powered by a Raspberry Pi which currently handles all outgoing calls and will soon deal with incoming calls too. Our original DECT analogue phones will slowly be moving to SIP, with one set of them moving this week while the other remains on the analogue line until we transfer entirely to SIP. My bedroom will use my new Grandstream GXP2170 (which was only £100, so only £30 more than I was going to pay, and I got a much better phone). I'll also finally be getting my own private number in the house.

As it happens, BT wants to switch the whole country from the basic PSTN to an IP based network to make it more competitive and it's a good move too. There's more about this change that BT wants to bring to us here.

It's often been laughed at by my friends, but now it was too much (or too little). Our broadband connection was abysmal - often only achieving a paltry 2Mbps (0.25MBps). This meant that even simple things like SSH couldn't work properly and would often time out.
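For anyone wondering where the MBps figures come from, it is just a division by eight (eight bits in a byte). A quick Python sketch is below. The function names and the 100MB example file are mine, purely for illustration, and it ignores protocol overhead, so real transfers will be somewhat slower.

```python
def mbps_to_mbytes_per_s(mbps):
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbps / 8


def transfer_seconds(size_mb, mbps):
    """Ideal time in seconds to move size_mb megabytes over an mbps link."""
    return size_mb / mbps_to_mbytes_per_s(mbps)


if __name__ == "__main__":
    for speed in (2, 56):
        rate = mbps_to_mbytes_per_s(speed)
        # 100MB is a hypothetical file size chosen for the example.
        seconds = transfer_seconds(100, speed)
        print(f"{speed}Mbps = {rate}MBps, 100MB takes about {seconds:.0f}s")
```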

I will point out that where I live was one of the pilot locations for broadband when it first came to the UK, so we had faster broadband than quite a few people at the time. On October 26th we decided to complain about how slow our internet connection had become. During the complaint, we were told that an engineer would be out to have a look. The engineer did arrive and mentioned that, according to the system, we were now able to get superfast broadband (up to 56Mbps or 7MBps). We were, of course, delighted, although I remained somewhat sceptical about this, and we decided to move to it straight away.

Now the fun starts. We were originally promised that it would be Tuesday the 31st of October and there would be absolutely no disruptions to our service until then. We were sent a BT Home Hub 5. Then within a few days, a new BT Smart Hub (aka the Hub 6). We were told to disconnect the old router and connect our new BT Smart Hub. We immediately lost our internet connection. On the 31st of October, after getting all excited for our new superfast connection, I was let down.

On the 2nd of November, after having had no internet for a week, meaning no maintenance on my web server or anything and only one backup downloaded in that time (thanks to my friend Calum, who allowed me to download a backup at his place using his superfast connection), I was beginning to get weary. We were annoyed that we still had no superfast connection, and when we phoned BT to find out why, there were a bunch of excuses, but in the end we figured out the main problem - the line wasn't supported. Wait a minute, didn't they say it was?

What BT said originally was not true. Our house was not suitable for fibre because we did not have a connection to the cabinet but a connection direct to the exchange, bypassing the fibre-enabled cabinet.

So we were then promised our superfast connection for the following Tuesday (7th November). I again remained extremely sceptical that this would be all done and dusted by then. My dad, on the other hand, kept firmly believing that this would happen.

As you guessed, we didn't have it on Tuesday the 7th of November, but we were told it would be there on Thursday the 9th (the day I was originally meant to be moving into my own house, but that's for another story). We were then told on Friday the 10th that it wasn't going to happen then and would definitely be in place by the next Tuesday (14th).

All in all, I'm just so angry with BT for messing us around. I once believed they were a good company but this has changed my opinions entirely.