As a developer, the one decision at the top of my list is which text editor to use.

The development environment needs to be pleasing and make you feel comfortable (I feel the same way whilst developing Dash: if the content management system isn't user friendly, you can't be comfortable using it). I've been through a lot of editors, starting with several versions of Visual Studio, including Visual Studio 2005, 2008, 2010 and 2013. They are all brilliant and I'm glad that I chose to use them for the 7 or so years that I was a .NET developer.

Things changed quickly though when I moved to Mac OS X. When I stopped developing in VB.NET and C# and began developing in Java, HTML, CSS, JavaScript, PHP and so on, I needed a new IDE that would suit those purposes. For the vast majority of those (all the web based ones) I used Aptana Studio 3. Aptana was brilliant, but it quickly felt dated, and I just could not afford the time to switch editors without being certain that the new one was right for me. A good IDE needs to be colourful (because that helps to distinguish different pieces of syntax), fast, not prone to crashing (as Aptana eventually started doing) and feature rich. For me one of the most important features of an IDE is support for SFTP, which Aptana offers out of the box. I then moved from Aptana to Eclipse with the Aptana plugin, which was pretty good to be honest.

Eclipse is brilliant for Java development, and I still use it because it can compile a JAR file in so few steps, it can interpret and debug programs well and it just feels like it was designed for Java. However, Eclipse was eventually laden with the same bug that Aptana has and would crash from time to time - particularly when in the Web perspective.

So I made another move, this time to Adobe Brackets. I jumped on the Brackets bandwagon when it was pretty young, and I loved it. Syntax highlighting is lovely, it's feature rich and it's open source. Unfortunately, this jump was too early - Brackets just didn't have everything I needed. In 2015, I started an Adobe Creative Cloud subscription. As a result I gave Dreamweaver a try and I liked it (looking back, I don't know why I liked it really other than the fact it had SFTP built in).

Introducing Atom

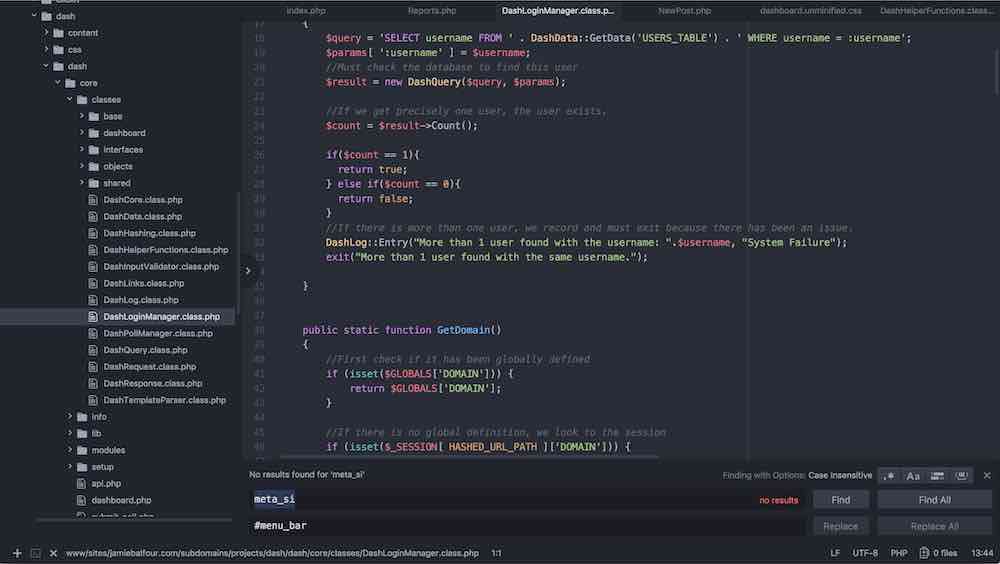

Atom is now my favourite text editor. After being introduced to it by a colleague at work, I feel like I've come to love it. It's colourful, well designed, doesn't crash and has everything I need from a text editor or IDE.

Why is Atom nearly the perfect editor though? Well, my first reason is that Atom has clear colouring - its dark interface clearly separates the background from the foreground and its syntax highlighting is bright and stands out well. On top of this, Atom features a plugin system, which means that if the feature you want is not built in, it's likely to be available as a plugin somewhere. Atom is also fast - it doesn't slow down too much as files get larger. I'm talking about PHP files here, which I always break into logical files that rarely exceed 3,000 lines.

People may ask, what about Visual Studio Code? Coming from a Visual Studio background, surely I'd like that? Well yeah, I do. But I found Atom to be even nicer.

I think that if you are reading this and looking for a new text editor with a beautiful touch to it, Atom is well worth a try.

If you have a different favourite, I want to know what your favourite editor is.

Whenever I am asked why I bothered building a personal website or why someone needs a personal website my reply is often something along the lines of 'it's fun' or 'it's my hobby'. But I very rarely touch on the benefits of my personal website.

There are a huge number of benefits to having my own personal website. I get around 500 visitors a month. I use it to showcase my work to potential employers and to give myself a public presence on the internet through which people can connect with me, but there are other things too. I enjoy learning and teaching, so my website is also a source of information where I put tutorials to help others learn stuff that I know.

But really what's the benefit? My first answer is that it's professional. The brand that my website pushes forward gives me a uniqueness that appears on all of my work now. The orange and blue theme of my website is also apparent on my CV, any letterheads I send and on certain emails. This looks highly professional and people like to see this. I also believe that having your own brand puts you above others who do not.

The second reason for having a personal website is that the website is, well, personal - it's all about you. LinkedIn is great for connecting but it's full of other people too. Go to jamiebalfour.scot and who do you think you are reading about? That's right, some guy called Jamie Balfour. There's nothing about John Szymanski or Murray Smith on there (well there might be). This keeps the reader focused on you. You can write solely about how good you are and all of your achievements and yeah, be a narcissist, blow your own trumpet!

The third reason I would say having a personal website is a must is that it gives people an easy way to read about you. A personal website allows people to read about you from all corners of the globe. Social media is great, but it's also laden with other things, like other people, a bit like LinkedIn.

I will admit my website is more of a personal project that evolved into something more. For anyone in computer science it's pretty nice to show that you can build a website from scratch, so I did exactly that (it shows a lot of perseverance too).

Ultrabooks are amazing devices - combining high end mobile computing power with a slim design and decent battery life. The first ultrabook released was probably the MacBook Air, a moment I remember like it was yesterday. I've always really liked them and have always had an interest in them but never went out and got one.

In the last few years I've been following the development of one or two of them, but one in particular came to my attention - the Razer Blade Stealth. This ultraportable features two USB 3.0 ports and one USB-C port. The USB-C port also supports 40Gb/s over Thunderbolt, allowing Razer to take advantage of the PCI-Express standard built into the laptop itself. As a result, Razer have developed the Razer Core, an external dock which features a full PCI-Express x16 slot. This allows you to insert a discrete desktop graphics card into the dock and use it as the laptop's graphics processor. This is why this Ultrabook excited me so much.

Now the latest Razer Blade Stealth has arrived in the UK and is available to buy from their website.

The thing is I'm saving my money and until I decide to sell my MacBook Pro, if that ever happens, I cannot be buying this laptop.

A couple of months back I was the victim of a hack on a website (not to be named) that ultimately gave away the information of its users, which included my information. The reason behind this was that passwords were not stored in an effective manner. This meant that the minute someone had access to the database, they had access to all of these passwords.

What this meant for me was that the attackers had my email address and my actual address, and they began to subscribe me to many things I would never sign up to whilst also sharing my user name and password details on the web. It's a cold and horrible thing for someone to contemplate doing because I had done nothing to them in the first place for them to launch an attack on me. And to be honest, the website was one whose sole duty was to help others - so it's pretty cruel to do that. Anyway, storing details about people in a secure manner is an important part of online security.

What an insecure database may look like

In a world where security is not a thing, algorithms such as the SHA (secure hash algorithm) would not exist in the field of security. In fact, the field of security would not need to exist. But unfortunately, because there are people who want to either steal something or just for the sake of it damage something, we have to compensate for this by developing secure ways of storing information.

In the world without security however, passwords could be stored as plain text - simply as they were typed in to the text input. This means that anyone who has access to the database can scroll down to the appropriate field and read a user's password. Unfortunately, if a hacker gains access to this information, they have the raw password - that's the password they can use to log in to the system. This is not good. So database designers, web developers and so on go a step further and use some kind of algorithm to conceal the password.

How to store sensitive data effectively

When data like a password is put into the database it should be encoded using some kind of algorithm.

The first way of storing passwords is to create or use an encryption algorithm to encode the password and a decryption algorithm to re-obtain the password from the cipher text. This method is uncommon because it means that there is at least one method to decrypt the password in the database, and it therefore leaves open a security vulnerability (if someone obtains the decryption algorithm and the key needed to decrypt the passwords, they can simply decrypt every password, and with a weak algorithm it can be easy enough to figure the key out if you have a password and its cipher text).
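To show why reversibility is the weak point, here is a deliberately silly Bash sketch that uses ROT13 as a stand-in for an encryption algorithm. The function names `toy_encrypt` and `toy_decrypt` are made up for illustration, and ROT13 is in no way a real cipher; the only point is that whoever holds the algorithm (and key, if there is one) can turn every stored value back into the original password.

```shell
#!/usr/bin/env bash
# Toy reversible "encryption" using ROT13. NOT secure; illustration only.
toy_encrypt() {
  # printf avoids the trailing newline that echo would add.
  printf '%s' "$1" | tr 'A-Za-z' 'N-ZA-Mn-za-m'
}

# ROT13 is its own inverse, so decrypting is the same transformation.
toy_decrypt() {
  toy_encrypt "$1"
}

stored="$(toy_encrypt "hunter2")"
echo "stored in database: $stored"
echo "recovered by anyone with the algorithm: $(toy_decrypt "$stored")"
```

Anything stored this way is only as safe as the decryption step is secret.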

The most common approach is to use a hashing algorithm such as SHA (the Secure Hash Algorithm family). A hash is designed so that it is practically infeasible to find two passwords that generate the same output, even though such collisions must exist in theory because the output has a fixed length. The algorithm is also designed to be irreversible, that is, it is impossible (or at least near impossible) to figure out what the original text was from the hash (at least without going through each combination of characters and testing it against the hash). This method is more secure than the former since it does not offer a quick way to take a stored value and turn it back into plain text.
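As a rough illustration of the hashing approach, here is a minimal Bash sketch that hashes a password before storing it and checks a login attempt against the stored hash. The function names `hash_password` and `verify_password` are assumptions for illustration, as is the random per-user salt (added so that two users with the same password do not end up with the same stored value). It also assumes the `sha256sum` tool is available; on a Mac, `shasum -a 256` does the same job.

```shell
#!/usr/bin/env bash
# Hash a password together with a salt using SHA-256 (hypothetical helper).
hash_password() {
  local salt="$1" password="$2"
  # printf avoids the trailing newline that echo would add to the input.
  printf '%s%s' "$salt" "$password" | sha256sum | cut -d' ' -f1
}

# Check a login attempt by hashing it and comparing with the stored hash.
# The plain text password itself is never stored anywhere.
verify_password() {
  local salt="$1" attempt="$2" stored="$3"
  [ "$(hash_password "$salt" "$attempt")" = "$stored" ]
}

# 16 random bytes, written as hex, to use as this user's salt.
salt="$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')"
stored="$(hash_password "$salt" "correct horse")"

verify_password "$salt" "correct horse" "$stored" && echo "login ok"
verify_password "$salt" "wrong guess" "$stored" || echo "login rejected"
```

A plain SHA-256 is very quick to compute, so this sketch only shows the idea of storing the hash and comparing hashes; it is not a recommendation for a production setup.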

These are just two ways of storing passwords but you can probably find other ways. I use a combination of both on my website (my own hashing algorithm and my own encryption algorithm on top).

Windows 10 was an amazing operating system for a few days when I first installed it on to my gaming PC. My gaming PC, The Zebra X2, is a beast of a machine which can run most games that I play like Starcraft II and GTA V in the highest available settings (Core i7 4770K, 256GB SSD, 8GB DDR3 RAM and an AMD 7950) but latterly it struggled with simple things like starting up.

After I installed Windows 10 the machine ran fine. However, one day when I was playing a favourite game of mine, Command and Conquer 3, I noticed a slight drop in framerate compared with the time before. I didn't think too much of it at the time but gradually I noticed that each time I played this game it was getting worse. At the very end, before I rid myself of Windows 10, it was running so slowly that when I used the graphics intensive Ion Cannon superweapon the game would just freeze and the animation for the superweapon would not be shown. The game would resume after the Ion Cannon blast was finished. So what the heck was going on?

My initial thoughts were that the hard disk drives that I stored my games on were starting to fail. I tested them all with SMART tools and none of them showed any signs of failure. I then assumed that it was my SSD so decided to install an old SATA III HDD into the system and installed Windows 7 on to it. It ran fine. I upgraded it to Windows 10 and again, it ran fine. So I assumed it was the SSD. I left the SSD in the system just disconnected.

After a time, the same weird thing happened to my system - it began slowing and the graphics were getting messed up in games. So now I assumed it was the graphics card or the PCI controller that had failed on me. I took the GPU out of the system and used the integrated graphics built in to the CPU. The system ran just the same, so I now knew it wasn't the graphics card that had failed, but I wasn't sure if it was a motherboard fault such as the PCI controller or the memory controller.

I decided to reinstall the SSD and flash my BIOS. Clearing the BIOS meant that I could set it back to the factory defaults and test it with them (I had tried this several times before but to no avail). Nothing changed.

My next choice was to clear the SSD and install Windows 7 on it. After reinstalling I panicked slightly as it wasn't working well at all with the Desktop Window Manager crashing on startup. After installing Service Pack 1 everything seemed to work perfectly. I would like to say that Windows 7 was the solution but I can't be sure.

I would probably put the problem down to several things: Windows 10 was clogging up the system (I don't know why); the original BIOS was not designed for Windows 10 and needed an update (I have since updated it again and may try Windows 10 again in the future); and Windows just needed that little reformat that we Windows users need to do on a regular basis.

My fix appeared to have come from the reinstall of Windows 7 and the BIOS reset. I will keep everyone up to date with my progress with Windows 10 again in the future.

The Windows 10 upgrade tool can be a pain!

Due to the upgrade tool in Windows 7, I have been upgraded to Windows 10. This time the system appears to be running well - that is at least in comparison to how it was before. I will keep you posted when it begins to slow down again (if it does).

The future of physical connectivity in computer systems looks very limited. One day in the future I can foresee all devices connecting with a connection not too dissimilar to USB Type C. The reversible USB connector that was released a year back with the new MacBook was received with both positive and negative responses. For me, it was an incredibly positive product since it does a lot of things in one.

Apple just didn't get it right by releasing it with just the one connector. At the moment, adapters are still not everyone's cup of tea. In fact, for most people, adapters never will be a good solution.

Anyway, the main point of this post is not the MacBook, it's something that I feel strongly about: physical connectivity.

One connector for all...

I don't really like this one connector for all since I've always liked the idea of different connectors for everything.

You know, I remember when I made the switch to FireWire over USB about 7 years ago, I thought that buying all my drives with FireWire would be great since it's going to be the future of data connectivity. I know I was late in coming in, but I didn't expect it to be removed from all of my devices within a few years! I mean take my Macs for instance, my Retina MacBook Pro does have a Thunderbolt to FireWire adapter available, but this isn't ideal and it's expensive. My Mac Mini does have a FireWire 800 connector on it but the new models also require the adapter since they no longer feature FireWire on them. My PC is just as annoying however, since it doesn't even have a FireWire header on it. My previous PC (the Zebra, built in 2011) featured a Gigabyte GA-Z68X-UD3 motherboard which had 3 FireWire connectors (including headers) and then all of a sudden, an upgrade 2 years later to a Gigabyte GA-Z87-UD3H motherboard and a Haswell architecture, and I've suddenly got no FireWire connectors.

Actually, one of the most annoying things is that my other PCI cards (not PCI-Express), which I've been using since about 1998 when I got my first PC, can no longer be used in my system because there are no free PCI slots (I have a serial port card and a TV tuner in them). This includes a really old, but still useful, video capture card (circa 2000).

A single connector for all also brings about the concern of overloading buses or whether or not everything is polled (as USB is). Speed can become an issue when one connector is used for everything.

However, one connector for all is a good thing too, since every device you use will use that connector and it's easy to remember what you need to use the device (i.e. a USB-C cable). But many companies, such as Apple, are difficult and try too hard with their own connectors and make the one for all difficult. Look at the Lightning connector for example, every other smartphone uses Micro USB 2.0 or Micro USB 3.0 meaning you can share your charger with any other smartphone user; that is everyone except iPhone users. It never works. One for all is too difficult.

Complications also arise when you are working with very specific applications. I for one still use the 1980s RS232 standard for many things such as electronic circuit boards for experiments (although I'm looking into using an RPi for this in the future) and for control commands for my projector. With a connector like USB-C, this becomes more complicated, since RS232 was a very simple connector and it becomes harder to emulate old standards.

Another even more annoying thing is having to buy an adapter to make it work with your older devices such as serial port devices. These devices may be hard to come by, but the bigger issue is if we end up needing all these adapters we've got to pay for them, and more specific adapters will probably be fairly expensive.

Here is a comparison table showing how Thunderbolt has changed over the years:

| Version | Maximum Speed | Maximum Power Output | Connector Type |
|---|---|---|---|
| Thunderbolt | 10Gbps | 10W | Mini DisplayPort |
| Thunderbolt 2 | 20Gbps | 10W | Mini DisplayPort |
| Thunderbolt 3 | 40Gbps | 100W | USB-C |

Notice any similarities between Thunderbolt 3 and USB-C that was announced on the new MacBook? That's because they will likely merge.

The conclusion

Here's my solution: make the one connector for all a reality, just keep the old connectors alongside this new connector, thus giving people, like me, the option to use older connectors without needing to buy the adapter. This keeps costs down, but also leaves users of older devices able to continue to use them. Don't cut out USB Type A and replace all mice and keyboards with USB-C connectors.

We cannot live in a world where we need to keep all of these dongles for everything. It's simply ineffective and expensive, especially when one breaks down. I believe that because we have come from a world of loads of different connectors into a world wanting a single connector for all, we will be faced with many problems. This is particularly the case with industries such as the music industry, where devices of today really lack in the connectivity side of things. I mean the world got rid of the Gameport (or MIDI port) without any major problems, but those who had purchased MIDI devices that used the Gameport-style connector as the input had to go out and buy adapters or get new devices. The change can come on too rapidly, especially for some. The slow but very painful disappearance of FireWire in the last few years has made its mark, even for me since I can no longer connect my FireWire drives to my PC (I can with my Mac mini thankfully). And now companies are phasing out audio jacks and in particular the multi audio jack system, all in favour of USB or single audio jack solutions.

Tesla, who just so happen to be one of my favourite companies, are unveiling their latest electric car - the Model 3. I am very excited by this mainly from a technology perspective but also from an environmental point of view.

Tesla's current lineup of cars is absolutely stunning, and although it may seem like a dream right now, I am very interested in the range and some time in the future I would love to own one (the lineup will likely have changed by the time I get around to looking at buying one).

Tesla are a fantastic company who build things to an outstanding degree as I noticed when I was in one of the Tesla Stores. They also innovate way more than other car manufacturers, so kudos to them.

I am excited by the Model 3 however as it will have a lower price point than the other models and it could possibly be the next car my parents buy. The future is electric and I'm hoping the Model 3 proves this further.

For the very first time since buying my 2012 Mac Mini in 2014 I have reformatted it with a fresh OS X install. There was no particular reason for this other than wanting a fresh, minimal install of OS X again - too many files on my system were taking up my drive, so it was just time. The system was still running exactly as it was when I bought it, so it was nothing related to that. Just saying, Macs don't need that kind of reformat anyway.

Anyway, since this is the first reinstall of OS X on that machine, I thought I'd give my configuration script a try. It really is wonderful, and it's really all thanks to my friends Ben and Merlin that it's as good as this.

The script looks like:

    # Install Homebrew, then the tools I use
    ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"
    brew install rlwrap
    brew install Caskroom/cask/smlnj
    brew install Caskroom/cask/osxfuse
    brew install sshfs
    brew install python
    brew install homebrew/x11/swi-prolog

    # Ask for the Dropbox path, use it now and save it to the Bash profile
    echo "Insert Dropbox path:"
    read -r input_variable
    export DROPBOX="$input_variable"
    echo "export DROPBOX=\"$input_variable\"" >> ~/.bash_profile

    # Make sure the screenshots folder exists
    mkdir -p ~/Pictures/Screenshots

    # Replace the Dock and Finder preferences with the copies kept in Dropbox
    rm -f ~/Library/Preferences/com.apple.dock.plist
    rm -f ~/Library/Preferences/com.apple.finder.plist
    sudo cp "$DROPBOX/Mac configurator/configuration/com.apple.dock.plist" ~/Library/Preferences/com.apple.dock.plist
    sudo cp "$DROPBOX/Mac configurator/configuration/com.apple.finder.plist" ~/Library/Preferences/com.apple.finder.plist

    # Save screenshots to the new folder and restart the UI server to apply it
    defaults write com.apple.screencapture location ~/Pictures/Screenshots
    killall SystemUIServer

So once I got my Mac up and running and Dropbox installed, I ran this script to copy all the necessary files across to their places, which restores my Mac to how it should look (i.e. it makes Finder and the Dock identical across my Macs). The most important part is where my Dropbox directory is put into my Bash profile as the DROPBOX variable, so that I can reference it from any script easily.

By the way, this is not me saying that Bash is beautiful, because the syntax is horrible. All I am saying is it is a pretty powerful little shell scripting language.

As I'm sure anyone who reads my blog for technology related stuff will know, Moore's Law is a fundamental 'law' that is often taken to mean that the speed of computers will double every two years. It's not entirely the case but it holds true for the majority of systems produced.

The law is more of an observation made by an engineer called Gordon Moore, one of the founders of what is now Intel. It was first put forward in 1965, and what it really states (in the form Moore revised in 1975) is that the number of transistors that can be crammed in to one integrated circuit will double every two years.
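To put rough numbers on that doubling, here is a minimal Bash sketch that projects a transistor count forward in time. The function name `project_transistors` and the starting figure of one million transistors are made up for illustration; the only thing taken from the law itself is the assumption of one doubling every two years.

```shell
#!/usr/bin/env bash
# Project a transistor count forward, assuming one doubling every two years.
project_transistors() {
  local start_count="$1" years="$2"
  local doublings=$(( years / 2 ))   # integer division: whole doublings only
  echo $(( start_count * (1 << doublings) ))
}

# Hypothetical starting point: a chip with 1,000,000 transistors.
for years in 0 2 4 10 20; do
  echo "after $years years: $(project_transistors 1000000 "$years")"
done
```

Ten doublings over twenty years multiply the count by 1,024, which is why even a small slip in the schedule matters so much.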

Intel builds this into its 'tick-tock' cycle, in which a tick shrinks the manufacturing process and a tock introduces a new micro-architecture on that process. For example, Sandy Bridge (a tock) was followed by Ivy Bridge (a tick), which took the Sandy Bridge architecture from 32nm down to 22nm. Haswell, the next tock, was a new architecture on the same 22nm process. It is the ticks that keep Moore's Law going, since a smaller process lets more transistors fit in the same size of integrated circuit.

With the release of Broadwell, which was based on the Haswell micro-architecture and was the successor to the 22nm Haswell, we have arrived at transistors that are only 14nm in size. Compared with Haswell's 22nm transistors this change is huge. The next step after 14nm according to the International Technology Roadmap for Semiconductors will come in at 10nm. Currently Skylake, which is the current range of Intel CPUs and is a tock in the tick-tock cycle, is facing several problems with going further. For the very first time in the history of Intel's tick-tock cycle, there are going to be two tocks in a row (tick-tock-tock). Why you may ask?

The answer is that Moore's Law no longer holds true with current fabrication techniques. In fact 10nm is posing such problems that it has been delayed until 2017. Cannonlake (formerly Skymont), which will be the next tick in the cycle, will succeed the successor of Skylake, codenamed Kaby Lake, and will drop the size to 10nm. From here on however, there is considerable worry about whether or not we can go any further. We may see for a few years that computers cannot get any more powerful. What worries me is that the companies may use this to make money out of us at no extra cost to them (since the technology will change but the systems will be no more powerful).

So what's the next step then? Quantum computing? Chemical based computing? Biological computing? Good question.

For the foreseeable future I would imagine that quantum computers will be the next step, since they already exist. What worries me is how the things we currently use (such as the Internet) will interface with these new devices. I worry greatly about this and how the transition will turn out.

Back in the day, when Netscape and Microsoft started the First Browser War, Internet Explorer and Netscape Communicator fought to become the most popular browser.

Ultimately, to many people's dislike, Internet Explorer won and Netscape disappeared, although the Netscape code base eventually evolved into Firefox. At this time Internet Explorer's share of the browser market kept growing, largely due to the fact that it was bundled with Windows, until the EU decided to make it compulsory for Microsoft to include a way for users to change to other browsers easily.

Since then, I have become a web developer, and I stopped using Internet Explorer in favour of Firefox and eventually Safari. I'm not the only one who stopped using Internet Explorer, however. Year after year the share for Internet Explorer has dropped. Here are the statistics that show this for November 2015 from W3Schools:

| 2015 | Chrome | IE | Firefox | Safari | Opera |
|---|---|---|---|---|---|
| November | 67.4 % | 6.8 % | 19.2 % | 3.9 % | 1.5 % |

And here is a set of statistics from 2002, 13 years ago (when I used Netscape I'll have you know!)

| 2002 | AOL | IE | Netscape |
|---|---|---|---|
| November | 5.2 % | 83.4 % | 8.0 % |

But why is this the case?

Microsoft just didn't care

Microsoft was very bad at developing Internet Explorer between iterations. They thought that because they had a huge market share they wouldn't lose it. I only realised this after becoming a web developer myself, since developing for Internet Explorer all the way up to IE9 is very difficult.

Even if other browsers had had a feature for a year or two, Internet Explorer would most likely not get it for a long time after. Even vendor-prefixed support wasn't there. Microsoft, as always, just thought it was OK to leave it.

Microsoft only cared when Internet Explorer started to disappear.

The future

Microsoft will have a lot of catching up to do with Microsoft Edge since Internet Explorer gave them a bad name among browsers. I personally do not see Edge transforming the share so that Microsoft has the upper hand again, but I can see them regaining some of the lost ground with it.

Edge is a fantastic browser, especially from Microsoft. Edge really does support cutting-edge technologies and implements most of the web standards well.