Did you know that CSS counters ignore elements that are display: none? What I mean is that a counter is not incremented by an element with display: none, or by anything inside it.

I only discovered this little quirk recently, whilst building some updates into DragonDocs.

Dash was my main project for a long time, so developing content management systems has never been a problem for me; in fact, they are one of my favourite things to develop.

But now something new, somewhat inspired by Dash, will hopefully become a big success. I'm working on a new major project that I started just this evening, and it's set to be my biggest project yet.

Dash 2.0 will rebuild my four-year-in-development project from the ground up, with more focus on object-orientation.

As a web developer myself, I have been through a bunch of editors trying to find the right one and, for the last few years at least, my editor of choice has been settled.

Back in 2016, a friend at the time suggested that I use a different editor. Since then, at least for web development, Atom has been my editor of choice. I say this for many reasons.

The first is that it is cross-platform. I was originally a Visual Studio user, but when I moved to Mac OS and Linux machines as my main computers, Visual Studio wasn't cross-platform. This meant I needed something different that would let me edit on both machines.

The second is the number of packages available for it. I use a terminal package that allows me to have an in-built command line at the same time as editing. I use an FTP package to allow me to upload in real-time.

I stand by Atom being my favourite, but it's a difficult call. Visual Studio Code is a definite close second for me, and over the years since I first used both it and Atom, it's got a heck of a lot better.

According to jscharting.com's blog, 95% of web developers asked in a survey use Visual Studio Code, Atom, Sublime, WebStorm, or Vim as their editor, meaning a huge number of people will be using one of those editors.

You may know that back before the release of the iPhone and the real mobile web, websites could be as large as 20MB in size. When the iPhone was released, it came with a web browser that could view the full web - not restricted to some WAP-based website. This was a problem for web developers at the time (I for one was not one of these until 2 years later) as it meant that users using their data allowances to view their websites would ultimately pay the price of running out of data in their given packages very quickly and would suffer slow download speeds (remember, the original iPhone didn't even have 3G, shockingly).

40% of users will leave a website if it has not loaded within three seconds. That means that even though my website downloads in less than one second here in the UK, on a connection slower than 3G it may well take longer than three seconds, and 40% of those users will leave. The iPhone shook things up. The main outcome was that websites were redeveloped with Flash removed, image files compressed, CSS and JavaScript files reduced as much as possible, the HTML content split into different files and the server-side processing sped up as much as possible. It was as though things had to go back to the way they were before broadband became a thing, but in reality, it was just making websites more efficient with what they had.

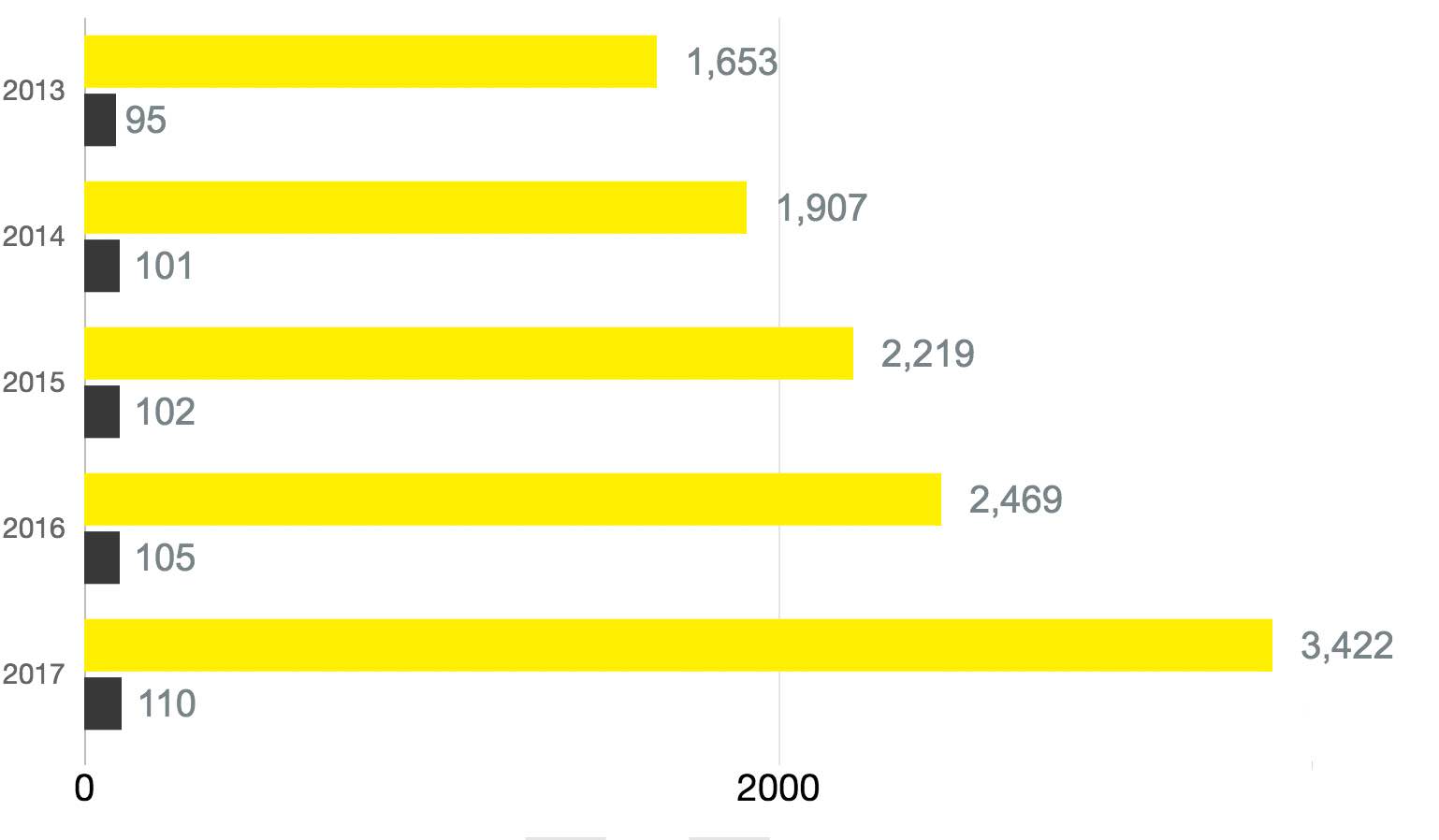

Pingdom's Year in Review

The main subject of this blog post is Pingdom's Year in Review for 2017. The article shows some slightly worrying statistics about what the web is becoming, again.

Perhaps the most worrying statistic is exactly what was described above being reversed - websites are becoming bigger again. See this graph from the report:

While it is true that mobile devices have faster connections thanks to 4G LTE and we now have faster broadband connections, it is still worrying. I say this because there are still many people who don't have faster than a 1Mbps download speed.
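To put a rough number on that, here is a small shell sketch of the arithmetic. The 3MB page size is a made-up figure for illustration, not one taken from Pingdom's report, and `download_seconds` is just a name I've picked for the helper.

```shell
#!/bin/sh
# Seconds needed to download a page, ignoring latency and request overhead.
# $1 = page size in megabytes, $2 = connection speed in megabits per second.
download_seconds() {
    awk -v mb="$1" -v mbps="$2" 'BEGIN { printf "%.0f\n", mb * 8 / mbps }'
}

# Hypothetical 3MB page on a 1Mbps connection.
download_seconds 3 1
```

With those assumed numbers the answer comes out at 24 seconds, eight times the three-second mark mentioned earlier, and that is before any latency is taken into account.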

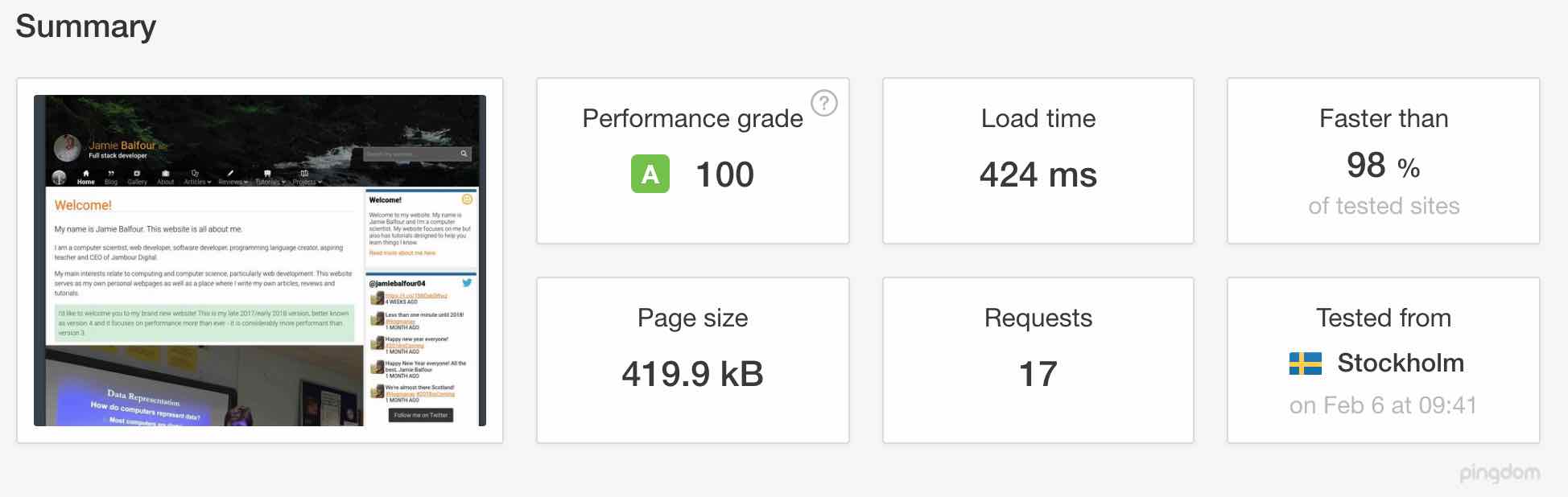

At the start of this year, when I relaunched my website, my main focus was on client-side performance, both in terms of JavaScript and in terms of download time. I managed to get my Pingdom result from 1.5 seconds to around 400ms, making it faster than 98% of websites that Pingdom tests.

My concern, however, was more about the data that a website costs a user on a smartphone. My previous phone contract limited me to 1GB of data, and I would often get through that in a few days. My current phone contract does give me 20GB of data, but I often see myself getting through about 5GB of that in a month.

As well as the amount of data being downloaded, the number of HTTP requests has gone through the roof. The graph above shows both the size of a website (yellow) and the number of requests (black) made by the website. 110 requests?! That's a lot of HTTP requests. I do get that my website is a personal website, but I do believe that the most important improvement to making a website fast and efficient is reducing the number of requests. Older browsers can only send up to 70-odd requests at once, and yes, older browsers like IE8 still have some market share and we do need to try and cater for them too.

Further down the article, it's clear that the amount of content a website downloads is getting bigger too, particularly images and JavaScript. Too much of either means a lot to download but, more crucially, it means more processing, particularly for JavaScript. JavaScript has to be parsed and executed once it is received and, as a result, puts more strain on the computer. These days this is much less of a problem with things like the incredible V8 Engine in Google Chrome and Safari's Nitro Engine. But all that processing needs to be done anyway.

The result of all of this labour on the CPU is that we have slower websites but also we drain the battery of mobile devices much faster. Perhaps this is the main reason why websites should consider what they are doing because the batteries in our smartphones aren't all that good after all (https://9to5mac.com/2017/09/25/ios-11-battery-life-problems/).

Conclusion

My concluding remark is that we are not going the right way about this. Building a website should not be about making it as functional as possible whilst sacrificing speed. There has to be a balance and it appears we are not striking it at the moment.

You can read more about this in the review at https://www.pingdom.com/2017.

Well, perhaps we should start with what made my old site slow. In this post, I'm going to talk about lessons I've learned and lessons you can also learn, in particular with PHP.

- PHP 5.3 - in version 3.0 I used PHP 5.3 on my website. I did eventually move to PHP 7.0 but I had to revert some parts of the website back to PHP 5.3 due to compatibility issues. PHP 7.0 is considerably faster, and with OPcache enabled performance improves further.

- Database calls - there were tons more database calls on my old website and they weren't optimised. I now make sure that all database calls go through the same connection using connection pooling, and I use prepared statements for every query.

- Deep integration with slower services - a big issue for my old version 3.x website. By including the Facebook API and so on I was burdening my server with things it really didn't need.

- File reads - and lots of them. Lots of file reads slowed my server and had a detrimental effect on performance due to file locks. This was perhaps the biggest reason my old site was slow.

What makes my new website fast?

- PHP 7.0 and OPcache - PHP 7.0 is a lot faster, and this time it causes no compatibility problems because the new website has been heavily optimised for PHP 7.0. OPcache also reduces the amount of time my server spends parsing PHP and takes some of the load away. (This article explains this https://blog.famzah.net/2016/02/09/cpp-vs-python-vs-perl-vs-php-performance-benchmark-2016/)

- Database calls are, as previously mentioned, faster now due to the use of connection pooling and prepared statements.

- Fewer third-party services - my website no longer uses the Facebook API. I've also cut out several other APIs that were being experimented with.

- Far fewer file reads - in fact, there are only two file reads now - one for the very short head and one for the very short foot.

- Object-oriented design - something I've always loved about programming is OO design, and when I first developed version 3.0 I had no idea PHP had an OO model of development. Now in 2018, under the redevelopment of the website, I decided it was best to make it object-oriented. This improves the ease of development but also makes it perform better.

- Far less CSS and JS - in terms of front-end performance, the site loads in less than 500ms from a UK-based connection (where my server is), and this is only possible due to the optimisations that have been made. I've cut both the CSS and JavaScript files by considerable amounts, by as much as half. This has been particularly successful thanks to the Girder framework, which means less effort spent on the responsive design that was, I will admit, quite cumbersome before. One of the biggest reasons for the smaller CSS is that I no longer spend time overriding other CSS selectors and properties, because my new website abolishes things like Magnific Popup and relies solely on my own CSS. This means there is less overriding and more specific CSS. It also means that less time is spent parsing the CSS, which is better for both battery life and performance on mobile devices.

A final word about my site

It's not at the stage where it's finished and I'm also looking at ways to improve performance even more, but at the same time, I'm trying to bring my website back to how it was before in terms of functionality. For instance, I've still not had the time to bring a search box on to the masthead of the site and I aim to get that done soon. And I want to get the Twitter widget a bit more interactive as well.

Another new contract and another new technology.

This month has been pretty good for me in terms of contracts: I finished one at the start of the month, picked up a new one at the start of the month and another a week ago, and there is a potential fourth this coming week. I aim to provide my clients with the best possible service I can, and if I say so myself, I think they are pretty happy with what I offer them at present.

For my latest contract, I've been working with Stripe, which, in case you haven't heard, is a fantastic way to build a payment method into a website. I have used PayPal's API in the past and found it very easy to handle; however, some websites demand more than just PayPal due to users not having access to PayPal or whatever.

For the contract I am working on, the requirements specification document we drew up makes it clear that a seamless, transparent payment system is essential, one that lets users quickly enter their details, including payment details. For this, I turned back to Stripe, which I had used briefly in an experimental website I was developing a few years back. Stripe is secure and easy to use. It also looks very professional.

Let's talk API stuff now. Stripe provides an exemplary API; however, I decided to use the PHP wrapper found on GitHub to keep this lightweight. I then made my own wrapper around it so that it becomes a reusable script across all of the sites I host.

The great thing about the API is that there is no redirect or required way in which you process data, and you choose what you want to do with the finalised output of the form. In fact, you don't even need to take payment (that's a bit silly but it's true). You just need to write a simple script that processes it afterwards.

The main benefit of this is that you can do all sorts of things before or after the form submission, such as sending a receipt by email or posting a notification of a purchase on the website. You can also add the purchase to a database that stores what a user has bought.

But the main thing about Stripe is its ease of deployment. It only took me about 2 hours to get the whole site up and running with a test version of Stripe.

In the last few months, since I set up my own server, I've been experiencing something I didn't even know had been happening before now.

I'm talking about brute force attacks on each of the websites I host. None of them are at all clever and I've been mitigating these problems recently anyway.

But before I had root access to my server I had no idea that these attacks happened so often. The last few days I have been blocking several IP addresses from SSH and website visits on the sites I host, but I'm starting to notice a trend.

In fact, this trend relates to a post I made when I first moved to WordPress. I haven't used WordPress for years and I'm happy to say that, because I was never a huge fan of it. I ended the WordPress part of my website at the end of 2013 and I haven't looked back. However, my websites are still getting constant requests for one particular file that doesn't exist. I'm talking about entries like these in my Apache logs:

- /var/log/apache2/access.log.1:IP_ADDRESS - - [07/Nov/2017:12:38:28 +0000] "GET /wp-login.php HTTP/1.1" 404 28038 "-" "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:40.0) Gecko/20100101 Firefox/40.1"

- /var/log/apache2/error.log.1:[Tue Nov 07 05:37:02.133215 2017] [:error] [pid 30560] [client IP_ADDRESS] script 'wp-login.php' not found or unable to stat

There are hundreds of them! As a result, since neither my customers nor I use or will use WordPress, I've decided to block all wp-login requests.

If there's one thing you should take from this post, it's this: check your logs for the same issue!
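If you want to try that check yourself, a rough sketch might look like the following. It assumes an access log in Apache's common or combined format, where the client address is the first field and the request path is the seventh. The function name and the sample log lines (which use reserved documentation addresses) are made up for illustration; on a real server you would point the function at your own log file instead.

```shell
#!/bin/sh
# Count requests for wp-login.php per client address in an Apache access log.
# Assumes the common/combined log format: field 1 is the client address,
# field 7 is the requested path.
count_wp_login_probes() {
    awk '$7 ~ /^\/wp-login\.php/ { hits[$1]++ }
         END { for (ip in hits) print hits[ip], ip }' "$1" |
        sort -k1,1nr -k2,2
}

# Hypothetical sample log so the sketch runs without a real server.
sample_log=$(mktemp)
cat > "$sample_log" <<'EOF'
203.0.113.5 - - [07/Nov/2017:12:38:28 +0000] "GET /wp-login.php HTTP/1.1" 404 28038 "-" "Mozilla/5.0"
203.0.113.5 - - [07/Nov/2017:12:38:31 +0000] "GET /wp-login.php HTTP/1.1" 404 28038 "-" "Mozilla/5.0"
198.51.100.7 - - [07/Nov/2017:12:40:02 +0000] "GET /index.php HTTP/1.1" 200 5120 "-" "Mozilla/5.0"
198.51.100.9 - - [07/Nov/2017:12:41:10 +0000] "POST /wp-login.php HTTP/1.1" 404 28038 "-" "Mozilla/5.0"
EOF

# Prints one line per offending address, busiest first.
count_wp_login_probes "$sample_log"
rm -f "$sample_log"
```

Each output line is a hit count followed by the address, so the worst offenders sit at the top and are easy to pick out for blocking.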

First off, I have been developing websites since early 2010, and then I officially became a web developer in late 2012. When I say that I became a web developer, it's because that was when I saw the change from being a standard desktop software developer (look at my previous projects such as Painter Pro and Wonderword) to a web developer and got my first contract in January 2013. Since then I've come a long way.

When I first learned web development in 2010, I did everything with tables - because I didn't know much about CSS and found it a scary concept. As I learned Java and PHP in my own spare time I eventually picked up CSS as well. This was when I started to actually think maybe web development was the right place for me. People started to notice my website and my works such as BalfBar and BalfBlog and so on. Now, years later my website development has become a bigger part of my life - I take on jobs again.

I left my job at the start of October with the intention of doing freelance work for a while, at least until I get into teacher training because I know it's where my skills lie (and because I couldn't cope with the travel to my previous job).

However, a few years ago, whilst he was transferring some sites to me, I spoke to someone who had been developing websites for some time and took away some important advice. We discussed hosting sites ourselves and he told me that clients who host with you are easier to manage than those who host with someone else, and that they can get a much better tailored service. He said that he had been developing websites for about 7 years and managed them all himself.

This bit of advice stuck with me for about 5 years but I didn't really act on it. Now, after all those years of using different accounts for each website I built, I'm managing all of my clients' websites myself. This makes both of our lives easier.

Be a good developer and host

I want to be a good web developer and host to my clients, and I do this by offering them everything for so little compared with the competition. My hosting and services are considerably cheaper than the competition, but I currently only offer them as a single package - you can get a website created by me and I'll host it. The two go together, so you can no longer get a site built by me without having it hosted by me.

I offer a range of services, and maintain sites to keep them up to date with the times. I recently refurbished two of my oldest developed sites at no cost to the owner. You see, I actually enjoy this kind of thing, so doing this is a favour to me too.

To be a good host, I have introduced a range of new things. For instance, I moved everyone on my server from PHP 5.5 to 5.6 and now to PHP 7.0, and have enabled OPcache and so on. I have developed a bunch of reusable tools for users and I'm prepared to install other tools that users need. I've spent a lot of my personal time learning about web server maintenance and I've become really knowledgeable about it so that my clients can experience the best.

Future updates

My brother and I have been discussing a business venture that would bring a significant performance improvement to all of the hosted websites at no extra cost. To be able to achieve this, however, we will need to get enough websites to host so that we don't end up paying for something that we make a loss on.

When I first started my website in 2010 I never once thought I'd be interested in web development and server stuff. I only actually started my website as a way of getting information about my software out there. I never saw it as a way for me to learn a new technology or to experiment with new things.

As time has gone on, my demand for new stuff on my web server has gone up, and I'm now at the stage where I've become not only a competent web developer but also someone with a lot of experience with Linux servers (half of my time at work is spent on things like Linux server administration).

So without further ado, the main subject of this post. I bought a VPS package very recently and started a new website.

Well, I will one day transfer this website across to it, but for now I am experimenting with one or two things.

So I decided to compare my current shared hosting package with the VPS package in terms of performance using the PHP one-liner shown below:

```shell
time php -r 'for($i = 0; $i < 100000; $i++) echo $i;'
```

and here are the results, one set per package:

```
real 0m0.122s
user 0m0.038s
sys 0m0.078s

real 0m0.880s
user 0m0.144s
sys 0m0.248s
```

As you can see, the first set of results is much better, and that's because it is the VPS set. Despite the VPS package only having 512MB of RAM and 1 core vs 3GB of RAM and 4 cores on the shared package, the performance is still much higher (I was originally concerned there might be a problem with performance here because of the lack of cores).
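To put a figure on "much better", the two wall-clock ("real") times can be divided. This is only a sketch of the arithmetic, and `speedup` is a name I've made up for it. It also compares a single run on each machine, so the ratio is indicative rather than a proper benchmark.

```shell
#!/bin/sh
# Ratio between two wall-clock times, rounded to one decimal place.
# $1 = slower time in seconds, $2 = faster time in seconds.
speedup() {
    awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.1f\n", slow / fast }'
}

# The "real" times quoted above: shared hosting first, then the VPS.
speedup 0.880 0.122
```

That prints 7.2, so on this one test the VPS finished roughly seven times faster than the shared package.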

So there you have it, the difference is quite substantial and may mean that I change my own site to VPS, particularly because it will allow me to experiment with things.

NPAPI, or Netscape Plugin Application Programming Interface, was the norm for a very long time in web browsers. It was a single standard that allowed all browsers to use plugins. But plugins have plagued the web for a long time too. One of the most well-known plugins, Adobe Flash, ended up pretty much everywhere, requiring users to download a plugin for their system. It used NPAPI. On top of this, plugins were cumbersome to develop, and developers needed to know several in order to achieve the results they wanted. Now the web is finally moving away from a plugin interface to a much more standards-based interface.

NPAPI was the interface (a set of methods which each plugin must implement) which all plugins complied with. This was originally developed by Netscape, one of the original companies to develop a web browser and Microsoft's competitor in the first browser war. Netscape developed many standards and one of them was this plugin interface that has left us in the messy situation we are in now.

NPAPI has been around for a long time, but last year was supposed to be the end of it. In 2015 Mozilla announced they had plans to drop NPAPI by the end of 2016. This was later pushed back to March 2017. Chrome has already dropped NPAPI and did so in September of 2015, after turning support off by default in April that same year. Google said that NPAPI "has become a leading cause of hangs, crashes, security incidents, and code complexity" and spoke of the need "to evolve the standards-based web platform". It's important to note that NPAPI is an architecture from the 90s, when the web began to take shape, and at that point we were using HTML 3.2 and lower. Since then HTML5, CSS3 and JavaScript have all brought huge improvements to the standards-based web.

Many plugins already exist that take advantage of NPAPI including Flash and the Java applet plugin. But both of these can be replaced by much more modern solutions.

By removing NPAPI, browser developers are encouraging standards. They are making it more difficult for those who develop these plugins to make them a part of the future. By doing this they are offering a safer web environment for everyone. They are also ensuring that there is no longer the complicated mess of choice that Netscape and Microsoft once supported through NPAPI, and that we live in a standards-controlled environment where no one company owns the web.

A standards-based website is the way to go, and older websites need to update to catch up with standards. Nobody has time for older websites that rely on these plugins now; they are slow and ineffective and need to catch up.